How to Write Good Survey Questions for Free Online Survey Tools

Writing good survey questions is the difference between getting clear, trustworthy insights and getting noisy data that leads you in the wrong direction.

Even small wording choices can introduce bias, confuse respondents, or produce answers you can’t act on.

That’s why survey design best practices matter, whether you’re running a simple customer feedback form or an NPS survey.

This is also where a platform like Polling.com gives you a noticeable edge.

Its built-in question templates, bias-free phrasing suggestions, and intuitive preview tools help you write good survey questions even if you’re not a researcher.

In this guide, you’ll learn how to write good survey questions step by step, including wording techniques, survey layout best practices, examples of biased survey questions to avoid, and how to use free online survey tools like Polling.com to design better questionnaires with less effort.

1. Start with a Clear Survey Objective

Before writing any question, you need to know what you want to learn. A good survey starts with a focused objective, not a vague guess or a wishlist of unrelated topics.

Define What You Want To Learn (Rather Than What You Think You Want)

A common mistake when designing a survey is jumping straight into question writing without clarifying the core purpose. Instead, ask yourself:

- What decision will these answers help us make?

- What problem are we trying to solve?

- What insight is missing right now?

A precise objective keeps you from writing overly broad, biased, or unnecessary questions.

For example, “understand customer satisfaction” is too vague. A clearer objective is:

Identify which parts of onboarding create the most friction for new users.

With this clarity, every question becomes intentional, not random.

Align Your Questions To That Objective

Once your objective is defined, make sure each question directly supports it.

If a question doesn’t help you achieve the survey’s purpose, remove it. This avoids survey bloat, respondent fatigue, and irrelevant or unusable data.

For example, if your goal is to measure product usability, a question asking about brand perception doesn’t belong in the same survey.

Good surveys feel tight because every question has a purpose.

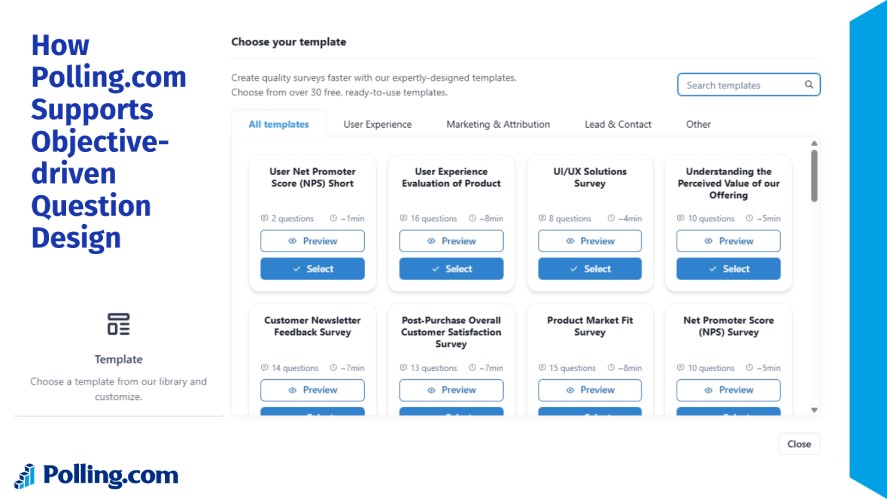

How Polling.com Supports Objective-driven Question Design

Polling.com helps you stay focused by guiding your question creation process:

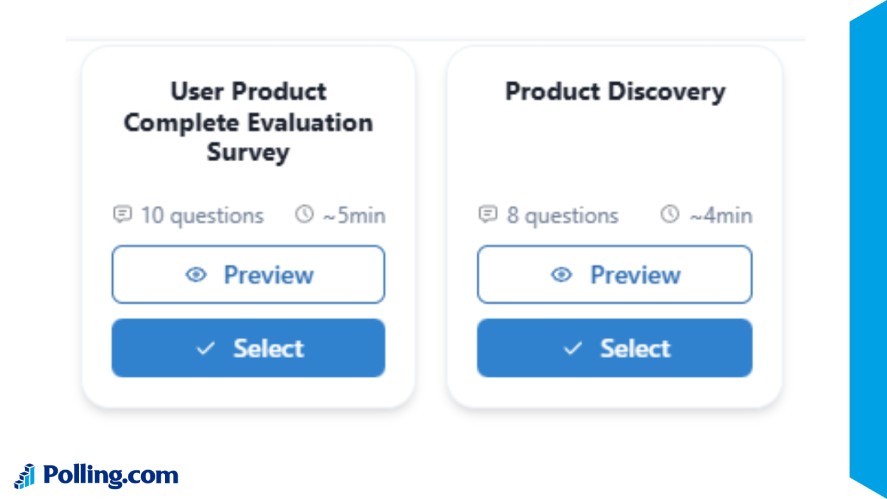

- Pre-built templates based on common survey goals (NPS, customer satisfaction, feature feedback, onboarding, etc.).

- Smart suggestions that flag unclear or biased wording.

- Simple logic and branching tools so you only ask relevant questions based on previous answers.

- Preview mode to see how your survey flows from a respondent’s perspective.

Instead of manually figuring out how to design a survey, Polling.com nudges you toward cleaner structure, clearer objectives, and higher-quality data—all with a free, beginner-friendly interface.

2. Know Your Audience and Choose the Right Question Format

Great survey questions aren’t just well-written; they’re written for the people answering them.

Your respondents’ background, reading level, and context all influence how they interpret a question.

Tailoring questions to your respondents is a large part of what makes survey data accurate and reliable.

Consider Respondent Reading Level, Language, and Context

Before writing, ask yourself what makes a survey question good for your specific audience.

A good survey question should be instantly clear to the audience you’re targeting. That means:

- Avoid complex grammar or academic wording.

- Use familiar, everyday phrases.

- Match the tone to the respondent (e.g., customers vs. employees vs. users).

- Consider whether they’re answering on mobile, at work, or casually at home.

For example, a question like “How would you evaluate the cross-functional synergies of your onboarding experience?” will lose most respondents.

A simpler, clearer version would be: “How easy was it to get started with our product?”

Context shapes comprehension, and comprehension shapes data quality.

Closed-ended vs Open-ended: When to Use Each

Choosing the right question format is a core part of writing good questions for online surveys.

| | Closed-ended Questions | Open-ended Questions |
|---|---|---|
| Use When | You need structured, quantifiable data | You need context, explanations, or deeper insight |
| Examples | Do you like this product? (yes/no) | What could we improve? |
| Best For | Measuring satisfaction, tracking trends, and comparing results | Identifying root causes, gathering feedback in respondents’ own words, and discovering ideas you didn’t think to ask about |

A good survey uses a combination of both: closed-ended questions for measurement, and open-ended ones for clarity.

How to Write Good Survey Questions For Different Audiences

Different groups need different approaches:

- Customers: Focus on clarity, usefulness, and emotional language.

- Employees: Be neutral, ensure anonymity, avoid sensitive wording.

- Users: Use product terms they already know; avoid technical jargon unless you’re surveying experts.

- General Public: Keep everything simple; avoid assumptions about knowledge or behavior.

The key rule is to write as if you’re speaking directly to the respondent, at their level, in their world.

3. Question Wording: Clarity, Neutrality & Simplicity

Wording is where most survey mistakes happen. Even small phrasing choices can introduce confusion or bias, and both damage data quality.

Good survey questions are short, neutral, and easy to interpret the same way by everyone.

Use Everyday Language & Avoid Jargon

Clarity beats cleverness. So, avoid wording that is overly formal, technical, academic, trendy, or ambiguous.

Instead, aim for simple, universal language that a 12-year-old could understand.

For example, instead of asking “How satisfied are you with the asynchronous communication affordances within our app?”, try “How satisfied are you with the messaging feature in our app?”

The goal is comprehension, not complexity.

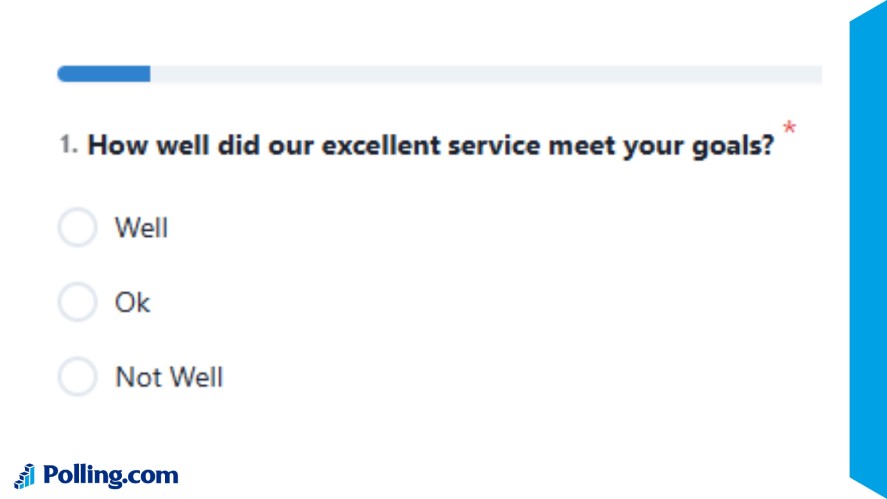

Avoid Leading or Loaded Wording

A leading question pushes people toward a certain answer. A loaded question contains an assumption.

Examples of biased survey wording:

- How much do you love our new update?

- Why do you prefer our service over competitors?

Better versions:

- How satisfied are you with our new update?

- Which company do you currently prefer, and why?

Neutral phrasing gives you real opinions, not the answers you subconsciously push people toward.

Reduce Bias By Balancing Scales and Offering Neutral Options

A skewed answer scale produces skewed results. For example:

Great/Good/Okay/Bad

This scale is biased because there are more positive options than negative ones.

A balanced version looks like this:

Very Satisfied/Somewhat Satisfied/Neutral/Somewhat Dissatisfied/Very Dissatisfied

Also include neutral or non-applicable options when appropriate. For example:

- Neither agree nor disagree

- I don’t know

- Not applicable

This prevents respondents from being forced into inaccurate answers, resulting in cleaner, more reliable data.

4. Avoid Common Pitfalls When Writing Survey Questions

Even well-intentioned survey creators fall into a few classic traps. These mistakes lead to confusing answers, biased data, or results you can’t confidently use.

Here are the pitfalls to watch out for when writing good survey questions.

Double-barreled Questions

A double-barreled question forces respondents to answer multiple ideas with a single choice.

Example: “How satisfied are you with our pricing and customer support?”

If someone likes the pricing but hates the support, their answer becomes meaningless. To fix it, split the question into two.
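As a rough first pass, you can scan a draft for questions that join two topics with “and” or “or”. The sketch below is only a heuristic, and `may_be_double_barreled` is a hypothetical helper name: it will also flag harmless questions, so treat a hit as a prompt to reread the question, not as proof of a problem.

```python
import re

def may_be_double_barreled(question):
    """Flag questions containing the standalone word 'and' or 'or'.

    A crude heuristic: it cannot tell whether the two joined items
    really are separate topics, so a human still has to decide.
    """
    return re.search(r"\b(and|or)\b", question, flags=re.IGNORECASE) is not None

draft = [
    "How satisfied are you with our pricing and customer support?",
    "How satisfied are you with our pricing?",
    "How satisfied are you with our customer support?",
]

for question in draft:
    if may_be_double_barreled(question):
        print("Review:", question)
```

Only the first question is flagged here; the two split versions pass.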

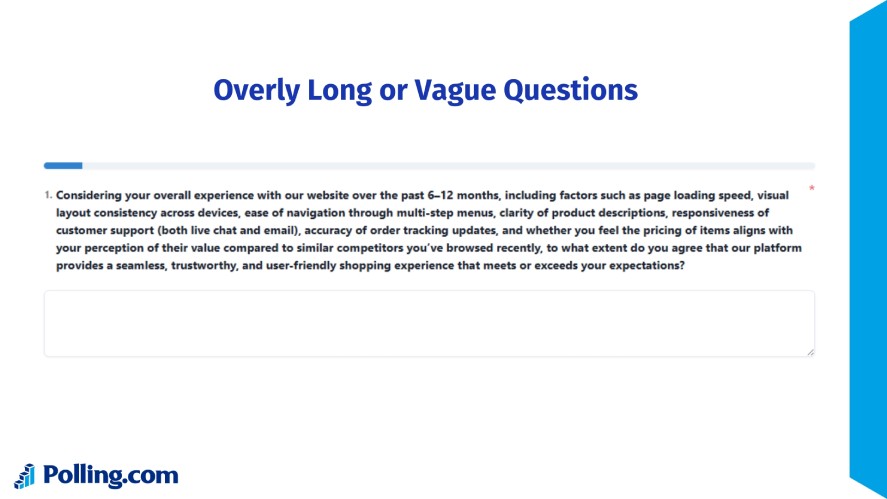

Overly Long or Vague Questions

When a question is too long, respondents lose focus. When it’s too vague, they’re not sure how to answer. Both issues lead to unreliable data.

For example, a broad question like “How do you feel about our overall performance in the last period?” doesn’t give respondents a clear time frame or aspect to evaluate.

A clearer approach would be: “How satisfied are you with your experience over the past 30 days?”

By keeping questions short and specific, you make it easier for respondents to understand exactly what you’re asking, resulting in better, more accurate data.

Unbalanced Answer Choices or Missing “Not Applicable/Other”

If your response options don’t represent all realistic answers, you’re forcing bad data.

Examples:

- Offering only positive choices

- Not giving an “Other” for unique responses

- Leaving out “Not applicable” when needed

Good scales are balanced, fair, and inclusive.

Ordering Effects and Fatigue

Question order influences how people respond. Too many questions cause drop-offs or straight-lining.

The best practices for survey design are to start easy, group similar topics, keep the survey under ~5 minutes, and place sensitive questions at the end.

Shorter surveys get higher completion rates and cleaner, more thoughtful answers.

5. Answer Choice Design: Get the Scale Right

Writing a good survey questionnaire isn’t just about the wording; the answer choices matter just as much.

Well-designed options reduce confusion and eliminate bias, helping respondents choose accurately.

Balanced Scales (Equal Positives and Negatives)

A good scale gives equal weight to both sides. Example of a biased scale:

“Excellent/Good/Fair/Poor” (two positives, one neutral, one negative)

Balanced version:

Very Satisfied/Somewhat Satisfied/Neutral/Somewhat Dissatisfied/Very Dissatisfied

Balanced scales protect the integrity of your data.
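One way to sanity-check a scale is to count its positive and negative options. The sketch below assumes you label each option’s polarity by hand (+1 positive, 0 neutral, -1 negative); the `is_balanced` helper and the labels are illustrative and not part of any survey tool.

```python
def is_balanced(scale):
    """Return True when a scale has as many positive as negative options.

    `scale` is a list of (label, polarity) pairs, where polarity is a
    hand-assigned +1, 0, or -1.
    """
    positives = sum(1 for _, polarity in scale if polarity > 0)
    negatives = sum(1 for _, polarity in scale if polarity < 0)
    return positives == negatives

skewed = [("Excellent", 1), ("Good", 1), ("Fair", 0), ("Poor", -1)]
balanced = [
    ("Very Satisfied", 1),
    ("Somewhat Satisfied", 1),
    ("Neutral", 0),
    ("Somewhat Dissatisfied", -1),
    ("Very Dissatisfied", -1),
]

print(is_balanced(skewed))    # False
print(is_balanced(balanced))  # True
```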

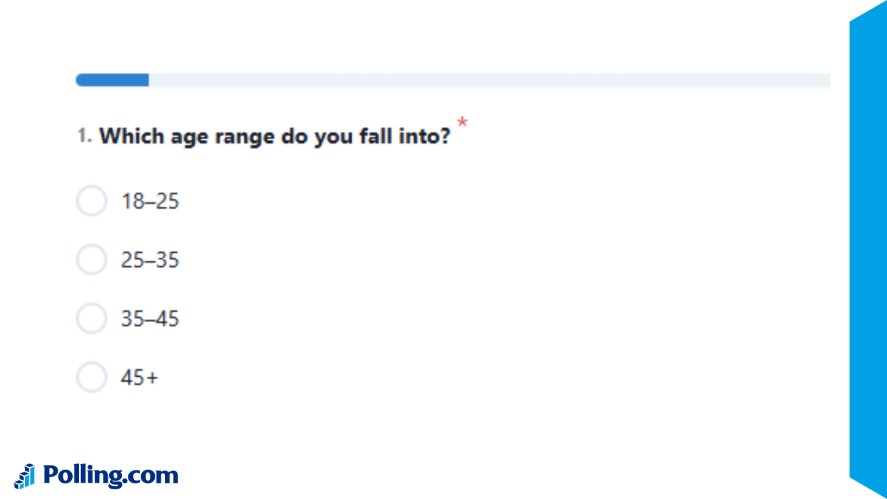

Mutually Exclusive and Exhaustive Options

Answer choices should never overlap.

For example, age ranges like 18–25, 25–35, and 35–45 are confusing: where does a 25-year-old belong?

Fix this by making the ranges mutually exclusive (18–24, 25–34, 35–44, 45+). The options should also cover all realistic answers so respondents don’t feel stuck.
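If you maintain numeric ranges in a spreadsheet or script, a small check can catch overlaps and gaps before launch. This is a minimal sketch with assumed conventions: each range is an inclusive `(low, high)` pair, `None` as the upper bound stands for an open-ended option such as 45+, and `find_range_problems` is a hypothetical helper. It does not check whether the lowest range starts low enough for your audience.

```python
def find_range_problems(ranges):
    """Return a list of overlap, gap, and coverage problems in numeric ranges."""
    problems = []
    ordered = sorted(ranges, key=lambda r: r[0])
    # Compare each range with the one that follows it.
    for (low_a, high_a), (low_b, _) in zip(ordered, ordered[1:]):
        if high_a is None or low_b <= high_a:
            problems.append(f"overlap: {low_b} fits more than one range")
        elif low_b > high_a + 1:
            problems.append(f"gap: {high_a + 1} to {low_b - 1} has no range")
    # Without an open-ended top range, older respondents have no option.
    if ordered and ordered[-1][1] is not None:
        problems.append(f"no open-ended option above {ordered[-1][1]}")
    return problems

overlapping = [(18, 25), (25, 35), (35, 45)]
fixed = [(18, 24), (25, 34), (35, 44), (45, None)]

print(find_range_problems(overlapping))
print(find_range_problems(fixed))  # []
```

The overlapping set reports the shared values 25 and 35 plus the missing open-ended option, while the fixed set reports nothing.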

Use “Don’t Know/Not Applicable” to Avoid Forced Errors

Sometimes the respondent truly doesn’t know the answer, or the question doesn’t apply.

If you don’t allow them to express that, they’ll either skip the question or choose something random.

Adding options like “I don’t know”, “Not applicable”, or “Prefer not to say” helps maintain data accuracy and reduces noise.

6. Layout, Flow & Pre‑testing the Survey

Even well-written questions can fail if they’re presented in the wrong order or without testing.

A thoughtful layout helps respondents stay engaged, reduces bias, and ensures you capture clean, reliable data.

Put Easier Questions First, More Sensitive Ones Later

Begin with simple, non-threatening items such as basic preferences or multiple-choice questions. This builds momentum and lowers the psychological barrier to continuing.

Once respondents are comfortable, gradually introduce more detailed or personal questions.

This structure improves honesty and reduces abandonment, as people are far more likely to answer sensitive items after they’ve already invested effort.

Keep Survey Length Manageable

Long surveys lead to drop-offs, straight-lining, and sloppy answers. So, aim for a concise set of questions directly tied to your survey objectives.

A good rule of thumb is five minutes or fewer, typically 8–12 well-designed questions.

If you need more depth, consider using branching logic to show follow-ups only when relevant, preserving the overall experience for most respondents.
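Branching logic boils down to a set of rules that map an earlier answer to a follow-up question. The sketch below shows the idea in plain Python; the question ids, trigger answers, and `follow_ups` function are made up for illustration and do not reflect how Polling.com or any other tool implements branching.

```python
# Hypothetical rules: (question id, answers that trigger a follow-up, follow-up id).
RULES = [
    ("satisfaction", {"Somewhat Dissatisfied", "Very Dissatisfied"}, "what_went_wrong"),
    ("used_support", {"Yes"}, "support_rating"),
]

def follow_ups(answers):
    """Return the follow-up question ids this respondent should see."""
    return [
        follow_up
        for question, triggers, follow_up in RULES
        if answers.get(question) in triggers
    ]

happy = {"satisfaction": "Very Satisfied", "used_support": "No"}
unhappy = {"satisfaction": "Very Dissatisfied", "used_support": "Yes"}

print(follow_ups(happy))    # []
print(follow_ups(unhappy))  # ['what_went_wrong', 'support_rating']
```

Most respondents see only the core questions, and the extra depth is reserved for those whose answers make it relevant.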

Pilot Test Your Questions to Catch Confusion

A pilot test is one of the highest-ROI steps in survey design.

Have a small group, like internal team members or a small slice of your audience, take the survey while noting any confusion, missing options, awkward wording, or unexpected logic jumps.

Then, review time-to-complete, open-ended feedback, and drop-off points.

These early signals help you refine questions and layout before launching the full survey, saving you from unusable or misleading results.
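If your pilot data can be exported, a few lines of Python are enough to summarize it. The sketch below assumes a made-up record format (seconds spent and the number of the last question answered) with invented sample values; `summarize_pilot` is a hypothetical helper, not a feature of any survey platform.

```python
from collections import Counter
from statistics import median

TOTAL_QUESTIONS = 10

# Invented pilot records for illustration only.
pilot_runs = [
    {"seconds": 210, "last_answered": 10},
    {"seconds": 340, "last_answered": 10},
    {"seconds": 95, "last_answered": 4},
    {"seconds": 120, "last_answered": 4},
    {"seconds": 280, "last_answered": 10},
]

def summarize_pilot(runs, total_questions):
    """Summarize completion rate, median time for finishers, and drop-off points."""
    if not runs:
        raise ValueError("need at least one pilot run")
    finished = [r for r in runs if r["last_answered"] == total_questions]
    # Count where people stopped when they did not reach the end.
    drop_offs = Counter(
        r["last_answered"] for r in runs if r["last_answered"] < total_questions
    )
    return {
        "completion_rate": len(finished) / len(runs),
        "median_seconds_finished": (
            median(r["seconds"] for r in finished) if finished else None
        ),
        "drop_offs_by_question": dict(drop_offs),
    }

print(summarize_pilot(pilot_runs, TOTAL_QUESTIONS))
```

In this sample, two of five testers stopped after question 4, which would be the first place to look for confusing wording or a missing answer option.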

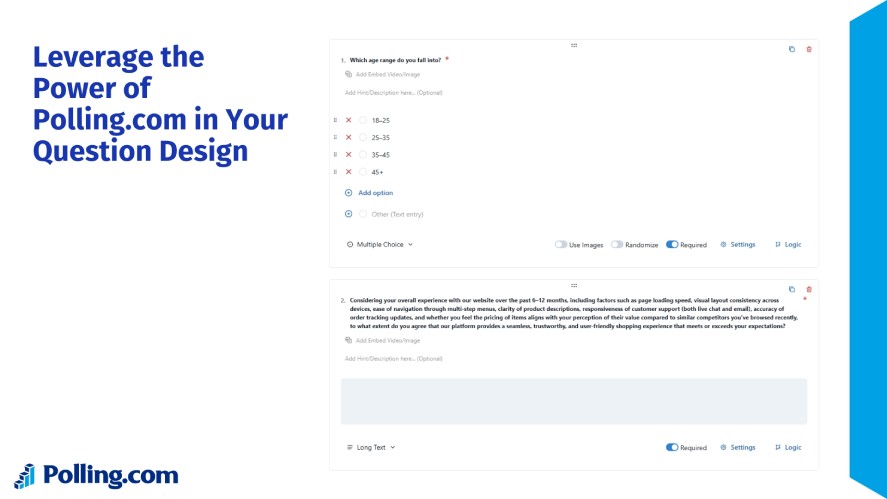

7. Leverage the Power of Polling.com in Your Question Design

Polling.com’s survey question tool makes good survey design easier, especially if you don’t have a research background. The tool offers:

- Smart templates and sample questions to help you start with proven best-practice structures.

- Intuitive drag-and-drop builder for creating clean flow and logical question order.

- Analytics dashboards that reveal drop-off points, confusing questions, and response patterns.

- Mobile-optimized layouts so questions stay clear and readable on any device.

Compared with generic free tools, Polling.com doesn’t just let you host a survey; it helps you write better questions that produce reliable, actionable insights.

8. Post‑Survey Review: Evaluate Your Question Effectiveness

Once your survey goes live and responses start coming in, the next step is making sure your questions actually worked.

A post-survey review helps you understand which items performed well, which confused respondents, and which didn’t produce useful insights.

This process is essential for improving future surveys and ensuring your data stays reliable over time.

Check For Non-response, Straight-lining, Weird Patterns

Look closely at how respondents interacted with each question. High non-response rates usually mean a question was unclear, too sensitive, or too time-consuming.

Straight-lining (selecting the same option across a series of scale questions) can signal boredom, survey fatigue, or a poorly structured section.

Also watch for odd response patterns, such as respondents skipping an entire block or giving inconsistent answers.

These red flags reveal parts of the survey that may need restructuring.
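Both checks are easy to run on exported responses. The sketch below assumes each response is a dictionary of question id to answer, with `None` for a skipped question; the sample data and the helper names `is_straight_lined` and `non_response_rate` are invented for illustration.

```python
def is_straight_lined(scale_answers):
    """True when every answered item in a block of scale questions has the same value."""
    answered = [a for a in scale_answers if a is not None]
    return len(answered) > 1 and len(set(answered)) == 1

def non_response_rate(responses, question_id):
    """Share of respondents who left one question blank (None or missing)."""
    if not responses:
        return 0.0
    skipped = sum(1 for r in responses if r.get(question_id) is None)
    return skipped / len(responses)

# Invented responses: q1 to q3 form one scale block, q4 is a separate question.
responses = [
    {"q1": 4, "q2": 4, "q3": 4, "q4": None},
    {"q1": 5, "q2": 2, "q3": 4, "q4": 3},
    {"q1": 3, "q2": 3, "q3": 3, "q4": None},
    {"q1": 1, "q2": 2, "q3": 2, "q4": 5},
]
block = ["q1", "q2", "q3"]

flagged = [
    index
    for index, response in enumerate(responses)
    if is_straight_lined([response.get(q) for q in block])
]
print(flagged)                             # [0, 2]
print(non_response_rate(responses, "q4"))  # 0.5
```

A straight-lined block is not always a bad sign, since some respondents genuinely feel the same about every item, so it is worth reading these flags alongside completion time and open-ended answers.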

Use Analytics to See Which Questions Underperformed

Analytics tools can show completion rates, time spent per question, and drop-off points — all invaluable when diagnosing weak survey design.

With a free survey tool like Polling.com, you can quickly identify which questions respondents rushed through, abandoned, or interpreted differently than expected.

These insights help you pinpoint where friction occurred and what may require clearer wording or a different format.

Revise and Iterate For Next Time

A strong survey program is always iterative. Use what you’ve learned from your analytics to rewrite unclear questions, simplify complex ones, or reorganize the flow.

Improving even a few weak items can dramatically boost response rates and data accuracy.

Treat each survey cycle as a chance to refine and upgrade your question-writing approach so the next round captures more honest, actionable respondent insights.

Conclusion

Writing effective survey questions is a skill that directly impacts the quality of the data you collect, and ultimately the decisions your team makes.

When your questions are well-crafted, you get cleaner insights, more reliable feedback, and a much better understanding of what your audience truly wants.

If you’re ready to build a survey that respondents will actually complete, tools like Polling.com make the process smoother with clean layouts, mobile-friendly design, and built-in analytics.

Use it to create your next survey with confidence, and keep refining your approach with each round to maximize the value of every response.